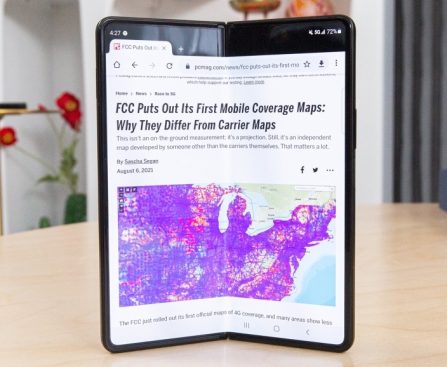

Android 16 QPR3 Beta 2 introduces notable changes to the System Settings interface that make it easier to navigate. The update groups related settings under clearer sub-headings, giving the page a more intuitive layout. Previously, System Settings displayed a long list of options with little visual distinction between them, which could be daunting for users searching for a specific setting.

With this update, settings are organized into distinct sections, such as “Interaction” for keyboard, gestures, and navigation mode, and “Update device” for system updates. This grouping lets users find the settings they need without scrolling through a long, undifferentiated list. The new layout also complements the existing search feature, making specific settings easier to discover.

The update is part of a broader effort by Google to improve the stability and functionality of its operating system. Alongside the revamped System Settings, Android 16 QPR3 Beta 2 includes an extensive list of bug fixes and stability improvements for Pixel devices. These fixes address issues such as severe system crashes, notification shade problems, charging limit issues, and slow Wi-Fi connections.

In summary, the update aims to deliver a smoother, more user-centric experience for Android users, particularly Pixel owners. The System Settings changes are a valuable improvement, making it simpler for users to personalize their devices and manage system settings.